I finally switched over from Chrome to Firefox, after switching away from the latter over 12 years ago. I’d basically given up on any shred of privacy I might have left on the internet, but the final straw for me was Chrome totally bypassing the DNS blocklists on my PiHole (╯°□°)╯︵ ┻━┻

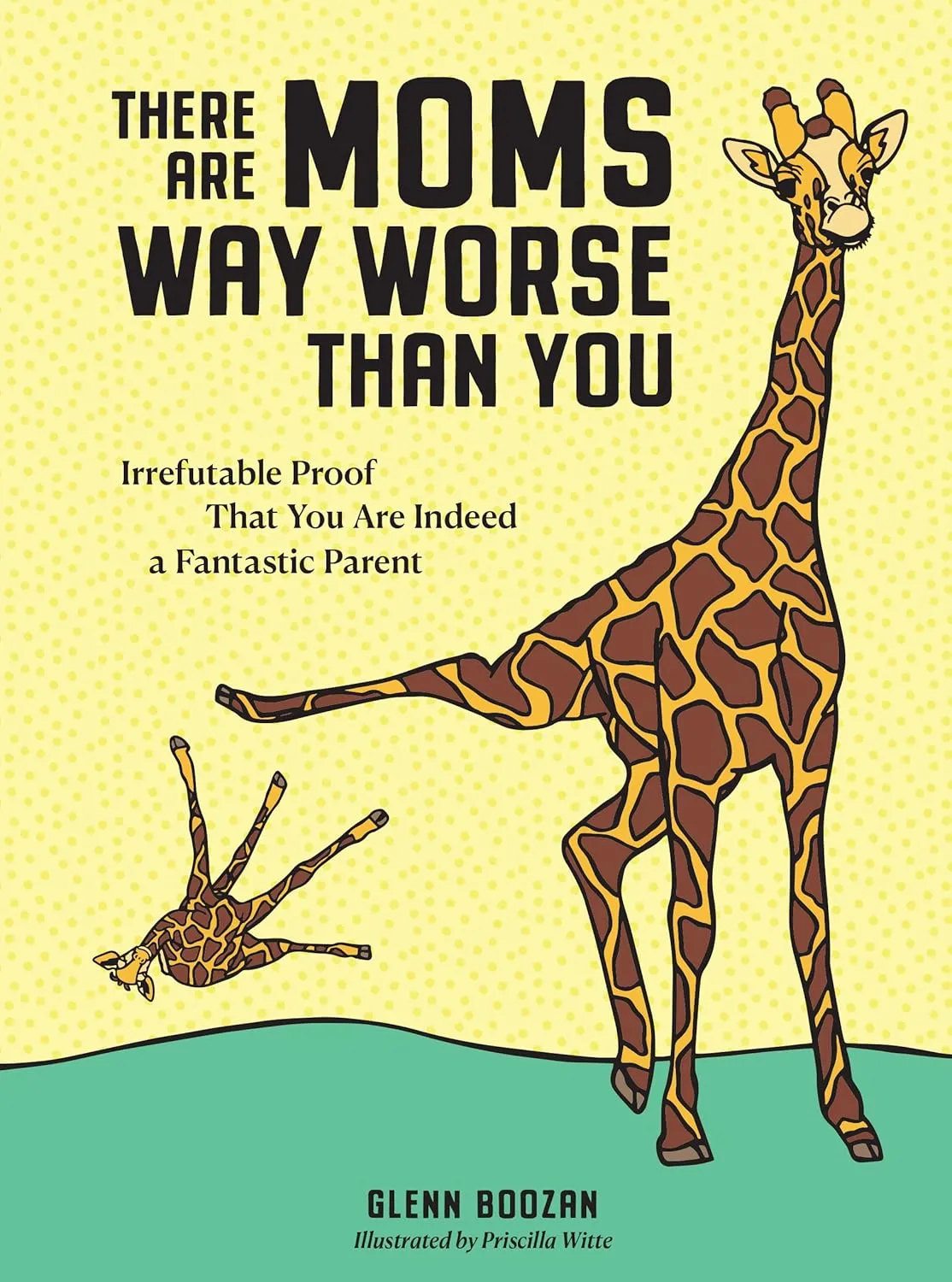

Unsurprising, really. You’re encouraged to read this comic (PDF) on the company’s intentionally odious practices.

The usual argument is “why’re you complaining about something that’s free?” Because I deem privacy to be a fundamental right that is to be respected even if you’re giving shit away. We can gripe about its fundamentalness but can perhaps agree that “Privacy means people know what they’re signing up for — in plain English, and repeatedly.” There’s nothing clear about this with Chrome. It’s not hard to quit doing sneaky and evil things without people’s informed consent.

The Good

Transferring bookmarks (of which I have very few) and history was a breeze.

Most extensions I’ve depended upon in Chrome are available for Firefox. There appear to be ways to get Chrome extensions to work in Firefox but I haven’t needed them. Pure nostalgia: I was reminded of the Web Developer extension by Chris Pederick, which I started using in 2004 (I think) to live-edit CSS (which I thought was just magical, in addition to being a giant time-saver). It’s still around and is still fantastically useful. And available for Chrome as well. lol.

Developer tools, which I need for my job, are mostly the same but I found myself preferring the Firefox DevTools a little more for aesthetic/ergonomic/design reasons.

Picture in Picture is excellent.

Preventing YouTube and other websites from autoplaying videos is excellent.

The Okay

Syncing goes through Mozilla’s servers with end-to-end encryption, and it’s not as elegant and “Just Works™” as it is with Chrome. But it’s mostly the small things. Like how toolbar layouts are not synced, and how switching the default search provider on your desktop won’t change it on your mobile device. Not a deal-breaker in the least.

On a Mac, the Emoji entry shortcut (Ctrl+Command+Space) doesn’t work. For the number of emojis I use in my personal communications, this is far more annoying than the syncing issues.

AirPlay doesn’t work. Never worked on Chrome either. So whatever. Use Safari.

The Bad

None. It’s a fantastic browser.

Other Stuff

So why not Safari? Extensions. That’s really it. It’s a very limited ecosystem and some things I really need aren’t available for Safari. I suppose I could use two different browsers for work and play but I’m not there yet.

While I do use a PiHole, I’d recommend Privacy Badger and uBlock Origin to people switching away. Maybe even add NoScript to the mix. I believe Facebook Container is installed by default. The adversarial/defensive relationship we have with the internet feels a bit sad to me as a 90s kid who still remembers its magic and promise, but that’s how it is.

You can see where Firefox stores your profile via Help → More Troubleshooting Information → Profile Folder.
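
If you’d rather find the profile folder from a terminal, here’s a rough sketch. It assumes the usual per-OS locations (`~/Library/Application Support/Firefox` on a Mac, `%APPDATA%\Mozilla\Firefox` on Windows, `~/.mozilla/firefox` on Linux) and that a `profiles.ini` file lives there; Snap/Flatpak installs and newer setups may put things elsewhere. The function names `firefox_root` and `list_profiles` are my own, not anything Firefox ships.

```python
import configparser
import os
import sys
from pathlib import Path


def firefox_root() -> Path:
    """Guess the usual Firefox data directory for this OS (an assumption, not a guarantee)."""
    if sys.platform == "darwin":
        return Path.home() / "Library" / "Application Support" / "Firefox"
    if sys.platform.startswith("win"):
        return Path(os.environ.get("APPDATA", "")) / "Mozilla" / "Firefox"
    return Path.home() / ".mozilla" / "firefox"


def list_profiles(ini_text: str, root: Path) -> list[tuple[str, Path]]:
    """Return (name, folder) pairs for each [Profile...] section in profiles.ini text."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    profiles = []
    for section in parser.sections():
        # Skip [General], [Install...] and anything else that isn't a profile entry.
        if not section.lower().startswith("profile"):
            continue
        path = parser.get(section, "Path", fallback=None)
        if path is None:
            continue
        # IsRelative=1 means Path is relative to the directory holding profiles.ini.
        is_relative = parser.get(section, "IsRelative", fallback="1") == "1"
        name = parser.get(section, "Name", fallback=section)
        profiles.append((name, root / path if is_relative else Path(path)))
    return profiles


if __name__ == "__main__":
    root = firefox_root()
    ini = root / "profiles.ini"
    if ini.exists():
        for name, folder in list_profiles(ini.read_text(), root):
            print(f"{name}: {folder}")
    else:
        print(f"No profiles.ini found under {root}")
```

The menu route above is still the authoritative answer; this just saves a few clicks when it happens to match your setup.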

I’ve configured all installations on my laptops and phone to use DuckDuckGo as the default search engine.

Update: December 23, 2025

“Hello, We’re Firefox, The Only Browser That Hasn’t Hit Itself In The Dick With A Hammer. For years now, folks use us because of our un-hammered dick. Now, you may be wondering why today we’ve brought this hammer and pulled out our dick. Well I’m glad you asked-”

@TheZeldaZone

Now the CEO claims that there will be an AI “kill-switch” but I do not see why this unnecessary garbage is on by default (thinking of my parents here).